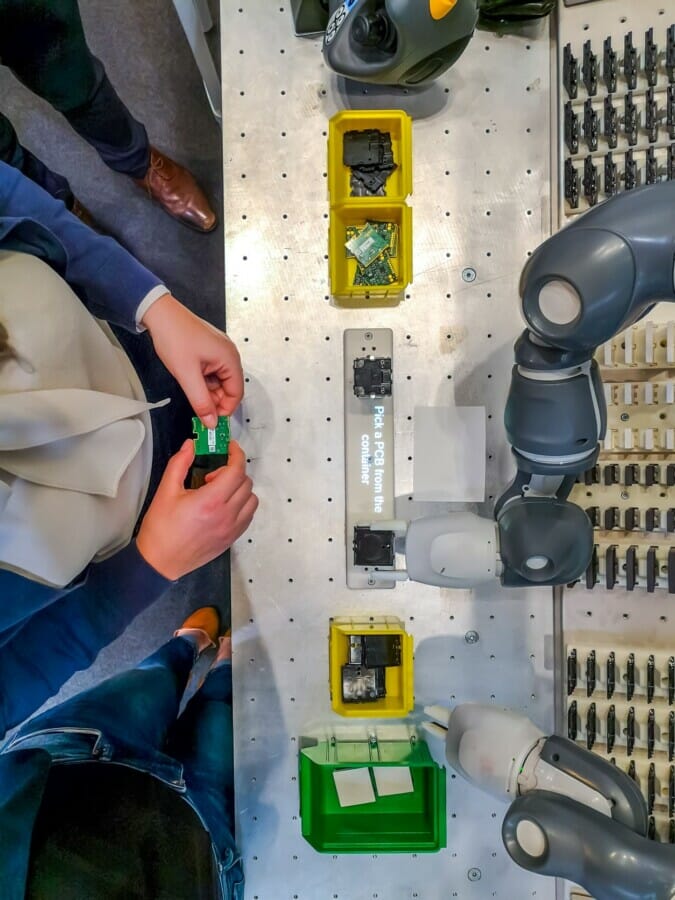

The use of pick-and-place robots is no new territory. However, these robots were previously not capable of precisely picking and placing parts from a bin of assorted parts into a machine. Due to the complex nature of final assembly lines, these robots were considered incapable of replicating humans’ dexterity in conducting such a task, but that is changing. Here Neil Bellinger, head of EMEA at automation parts supplier EU Automation, discusses the challenges when choosing bin-picking cobots.

A bin-picking vision system offers several benefits in the workplace: it reduces handling of parts, enables adaptive automation of robots, frees operators’ time for more suitable work and lowers their risk of repetitive strain injury. However, this technology still has a long way to go before it surpasses the effectiveness of using humans for such a task.

One of the challenges bin-picking cobots still need to overcome comes from the arrangement of objects within a container. Bin-picking cobots can struggle to pick items that are small or randomly arranged within a bin, because such items are difficult to distinguish from one another. To overcome this, the cobots require 3D vision systems with high dynamic range, high resolution and high precision to create a true-to-life picture for the robots when picking.

However, even with these specifications addressed there is still the challenge of shiny and reflective pieces. 3D vision systems can often struggle to get good 3D data on reflective or shiny objects. This is because reflections and inter-reflections cause distortion and anomalies in point clouds, meaning the system cannot reliably detect objects.
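As a rough illustration of how such anomalies might be screened out, the sketch below flags depth readings that sit far from the rest of a small, roughly flat patch of the scene. It uses the modified z-score, a general-purpose statistical test swapped in here for illustration, not the method of any particular camera vendor. The function name `flag_depth_outliers`, the threshold of 3.5 and the sample depths are all assumptions.

```python
from statistics import median


def flag_depth_outliers(depths, threshold=3.5):
    """Flag depth readings (in metres) that look like reflection artefacts.

    Hypothetical helper: uses the modified z-score, which is based on the
    median and the median absolute deviation (MAD), so a few wild readings
    do not distort the reference value. Assumes the patch is roughly flat.
    """
    med = median(depths)
    mad = median(abs(d - med) for d in depths)
    if mad == 0:
        # More than half the readings are identical: flag anything that differs.
        return [d != med for d in depths]
    return [0.6745 * abs(d - med) / mad > threshold for d in depths]


# Illustrative readings across a flat part; 1.90 m mimics a spurious reflection.
sample = [0.50, 0.51, 0.49, 0.50, 0.52, 1.90, 0.50]
flags = flag_depth_outliers(sample)
cleaned = [d for d, bad in zip(sample, flags) if not bad]
```

A real bin scene is not flat, so a filter like this would only make sense on small local neighbourhoods of the point cloud, and it does nothing to recover the data lost to the reflection.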

Another challenge bin-picking systems face is occlusion. This can be caused by wide camera baselines or poor camera placement, which results in shadowing from bin edges and potentially hidden small objects. Because of this, vision-based robots can miss the ‘hidden’ items in corners, resulting in lost details. This issue can be reduced with smaller baselines and better camera positioning, which leave fewer occluded regions in the scene.
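The link between baseline and occlusion can be shown with simple geometry. The sketch below is a simplified 2D model, assuming two optical centres (for example a projector and a camera) mounted at the same height and separated by the baseline, with a thin vertical obstacle such as a bin wall or a tall part standing on a flat floor. A floor point only yields depth data if both optical centres can see it. The function names and the dimensions used are assumptions for illustration.

```python
def shadow_interval(sensor_x, sensor_height, obstacle_x, obstacle_height):
    """Floor interval (start, end) hidden from one sensor by a thin obstacle.

    Similar triangles: the ray grazing the obstacle top reaches the floor
    at obstacle_x + (obstacle_x - sensor_x) * h / (H - h).
    """
    if obstacle_height >= sensor_height:
        raise ValueError("obstacle must be lower than the sensor")
    ratio = obstacle_height / (sensor_height - obstacle_height)
    reach = (obstacle_x - sensor_x) * ratio  # signed: shadow falls away from the sensor
    return tuple(sorted((obstacle_x, obstacle_x + reach)))


def occluded_width(baseline, sensor_height, obstacle_x, obstacle_height):
    """Width of floor that at least one of the two optical centres cannot see."""
    left = shadow_interval(-baseline / 2, sensor_height, obstacle_x, obstacle_height)
    right = shadow_interval(baseline / 2, sensor_height, obstacle_x, obstacle_height)
    # Both shadows start at the obstacle, so their union is one continuous interval.
    return max(left[1], right[1]) - min(left[0], right[0])


# Hypothetical setup: sensors 1.0 m above the floor, 0.2 m tall obstacle at the centre.
wide = occluded_width(baseline=0.2, sensor_height=1.0, obstacle_x=0.0, obstacle_height=0.2)
narrow = occluded_width(baseline=0.1, sensor_height=1.0, obstacle_x=0.0, obstacle_height=0.2)
```

In this model, with the obstacle between the two optical centres, the hidden width scales directly with the baseline, so halving the baseline halves the shadowed region. Real systems trade this against depth precision, which generally benefits from a wider baseline.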

Better cameras and camera placements certainly help improve precision, but they might not fix all the challenges for bin-picking vision systems. For example, bin-picking cobots still struggle with soft and deformable parts, such as plastic bags, and with shingled parts of varying heights and shapes that overlap one another.

These cobots can also experience interference that affects performance, such as movement of the cobot that throws off the distance calculations, resulting in the cobot hitting the sides of the bin or other parts. This means the cobots are not fully autonomous and still require human supervision in case of errors.

Examples of high-quality bin-picking cobots

Despite the challenges involved in creating a bin-picking cobot, there are many models currently on the market that have improved on the robots’ vision and occlusion issues. One example is the Omron TM Integrated Vision cobot, which is designed to support industrial-grade pattern recognition, object positioning and feature identification.

A successful example of a bin-picking vision system is the Zivid Two 3D camera, which is designed for bin-picking. The Zivid Two has an ultra-compact depth sensor with a small baseline, which improves its occlusion performance.

It is undeniable that bin-picking cobots have come a long way since the 1990s, when this technology emerged, but there is still some way to go before they surpass the need for humans or human supervision. However, with the pressure still there to make up for skills and staff shortages, this technology is likely to evolve to match humans’ skills in the near future. Meanwhile, current bin-picking vision systems can still help reduce injury, save delicate parts from overhandling, allow for quality control and more.

To keep up to date with the latest news impacting the manufacturing industry, visit the EU Automation Knowledge Hub.